Artificial intelligence (AI) is everywhere these days, and the landscape is changing rapidly. Educators see potential benefits, but the downside is also clear as reports of AI-assisted cheating in classrooms skyrocket. Developers are investing billions of dollars in building systems that report factually accurate information, avoid bias, and protect user data. That's great, but schools also need to take their own proactive steps to mitigate the educational risks. Read on for six ways you can manage the risks of AI in schools.

1. Co-develop policies and practices around AI.

Collaborate with all stakeholders to create ethical guidelines and practices that promote responsible AI use and reflect the values of your school community. Make sure your policies take equity and accountability into account and address training and awareness programs, data privacy and security, and ongoing review and revision.

2. Co-create norms around ethical use of AI.

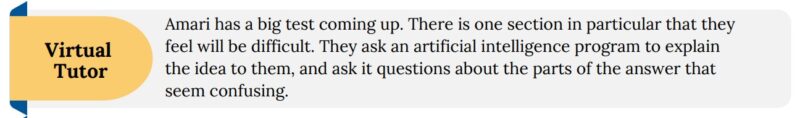

Involving students in developing norms and guidelines for AI increases compliance and helps them build their own understanding of when and why AI tools should be used. Discussing specific scenarios sparks rich conversations and helps students learn to apply ethical principles on their own. Students can rate each scenario on a scale from "very unacceptable" to "very acceptable" and explain their reasoning. Here's an example (get more in the free guide):

3. Select your AI tools carefully.

Some AI systems are designed specifically for use in schools. For example, Stretch, a new chatbot created by the International Society for Technology in Education (ISTE) and the Association for Supervision and Curriculum Development (ASCD), is a large language model trained only on materials created or vetted by these two organizations. That's not to say it's perfect, but the hope is that curated, high-quality inputs will improve the quality and appropriateness of the AI's outputs.

4. Balance AI-powered assessments with analog ones.

Prioritize comprehensive evaluation of students' skills and knowledge. Instead of having students complete every assessment electronically, you can have them write their answers by hand, in class, so that their responses accurately reflect what they can do without artificial assistance. Oral assessments, in which students explain what they know out loud, can also be useful.

5. Implement AI detection services.

AI detection services can help reduce instances of academic dishonesty. However, it's important to recognize that no AI detection technology is fully accurate: all of them produce both false negatives and false positives. Students who generate answers using AI may be credited with having written them themselves, and students who write their own papers may be wrongly flagged as having used AI.

6. Assign authentic projects that encourage the appropriate use of AI.

Traditional assessments may be more susceptible to inappropriate use of AI tools. One way to avoid this is to have students apply their skills and understanding to real concerns in authentic contexts. For example, if students use what they've learned to help resolve an issue in their community, teachers can encourage the use of AI tools (it's appropriate here!). An authentic project might ask students to brainstorm with an AI chatbot before choosing the best idea, or to have an AI system draft a letter that they then revise and edit together. Here's another example of shifting a traditional assignment toward an authentic one (get more in the free guide):

Interested in learning more about navigating AI in schools? Get the complete free guide from VAI Education, which covers understanding AI, its potential drawbacks, how it can help teachers, and other topics.